The Future of NLP from the Perspective of Language Models

Language Neural Networks Models Series - Part 1 of

Welcome to the fascinating world of Natural Language Processing (NLP)! NLP is a subfield of artificial intelligence (AI) that focuses on the interaction between humans and computers using natural language. It encompasses a wide range of tasks, from understanding and interpreting human language to generating human-like responses. One of the key components that powers many NLP applications is the concept of Language Models.

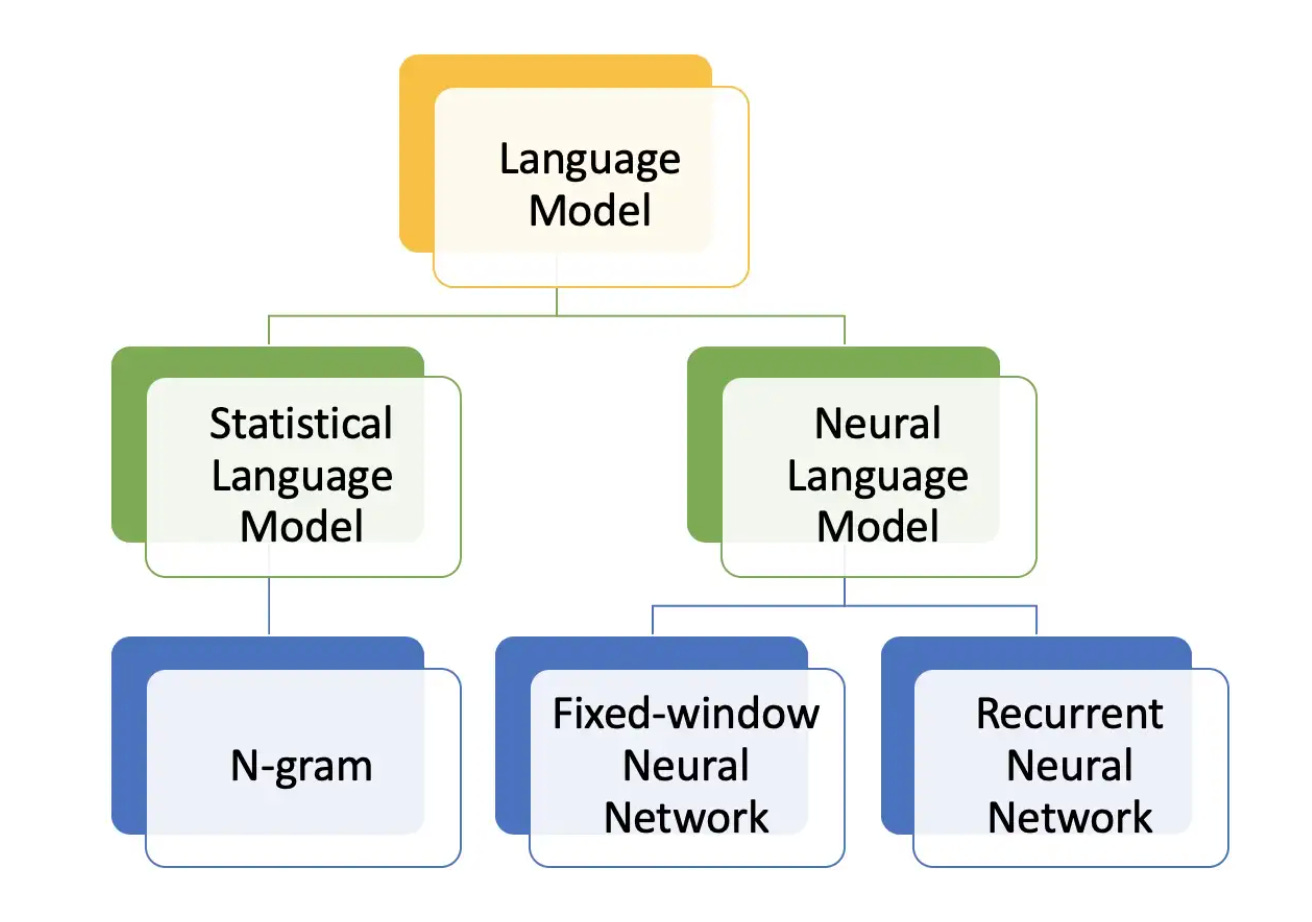

In the coming years, I expect to see large language models (LLMs) used in a variety of new and innovative ways to improve our ability to learn and adapt to new information and situations.

At its core, a Language Model is a statistical model that learns the probabilities of word sequences in a given language. In simple terms, it tries to capture the structure and patterns of a language by analyzing large amounts of text data. It is essentially a probabilistic representation of a language's grammar and vocabulary that lets a computer predict the likelihood of a particular word or sequence of words in a given context. This ability to predict what comes next is what allows the model to generate coherent, contextually relevant text, making it a fundamental building block for many NLP tasks.
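
To make "predicting the likelihood of a word or sequence" concrete, here is a minimal Python sketch of a bigram model, one of the simplest statistical language models, where each word depends only on the word before it. Modern LLMs use neural networks rather than a lookup table, so treat this only as an illustration of the idea. The table `NEXT_WORD_PROBS` and the functions `sequence_probability` and `generate_greedy` are hypothetical names, and the probabilities are invented for the example.

```python
from typing import Dict, List

# Hypothetical next-word probabilities P(next | previous), invented for illustration.
# "<s>" marks the start of a sentence and "</s>" marks its end.
NEXT_WORD_PROBS: Dict[str, Dict[str, float]] = {
    "<s>": {"the": 0.7, "a": 0.3},
    "the": {"cat": 0.6, "dog": 0.4},
    "a": {"dog": 1.0},
    "cat": {"sat": 0.8, "</s>": 0.2},
    "dog": {"barked": 0.9, "</s>": 0.1},
    "sat": {"</s>": 1.0},
    "barked": {"</s>": 1.0},
}


def sequence_probability(words: List[str]) -> float:
    """Multiply P(w_i | w_{i-1}) across the sentence, including start/end markers."""
    tokens = ["<s>"] + words + ["</s>"]
    prob = 1.0
    for prev, nxt in zip(tokens, tokens[1:]):
        # Unseen word pairs get probability 0 in this toy table.
        prob *= NEXT_WORD_PROBS.get(prev, {}).get(nxt, 0.0)
    return prob


def generate_greedy(max_len: int = 10) -> List[str]:
    """Repeatedly pick the most likely next word until "</s>" or max_len words."""
    words: List[str] = []
    prev = "<s>"
    for _ in range(max_len):
        candidates = NEXT_WORD_PROBS.get(prev)
        if not candidates:
            break
        nxt = max(candidates, key=candidates.get)
        if nxt == "</s>":
            break
        words.append(nxt)
        prev = nxt
    return words


print(sequence_probability(["the", "cat", "sat"]))
print(generate_greedy())
```

Greedy decoding always takes the single most likely word; real systems often sample from the distribution instead to get more varied text.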

Language models are trained on large corpora of text, from which they learn a probability distribution over the words and word sequences of the language.
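
As a rough illustration of "learning the probability distribution" from text, the sketch below estimates a bigram model by counting word pairs in a tiny made-up corpus and normalizing the counts (maximum likelihood estimation). This counting approach is a classical stand-in, not how neural LLMs are trained; the function name `train_bigram_model` and the corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigram_model(corpus: List[str]) -> Dict[str, Dict[str, float]]:
    """Estimate P(next | previous) as count(previous, next) / count(previous)."""
    pair_counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        # Lowercase, split on whitespace, and add sentence boundary markers.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            pair_counts[prev][nxt] += 1

    # Normalize each row of counts so the probabilities for a given previous word sum to 1.
    model: Dict[str, Dict[str, float]] = {}
    for prev, counts in pair_counts.items():
        total = sum(counts.values())
        model[prev] = {nxt: c / total for nxt, c in counts.items()}
    return model


# A tiny, made-up corpus; real language models train on vastly more text.
corpus = [
    "the cat sat on the mat",
    "the dog sat on the rug",
    "a cat chased the dog",
]
model = train_bigram_model(corpus)
print(model["the"])
```

In this corpus "the" is followed by "dog" twice and by "cat", "mat" and "rug" once each, so the model assigns P(dog | the) = 2/5 and 1/5 to each of the others.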

The mathematical foundation of language models is probability theory.

In the context of language models, we are interested in the probability of a particular word occurring next in a sequence, given the words that precede it.
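
Written out, the chain rule of probability lets us express the probability of a whole sequence $w_1, \ldots, w_n$ as a product of next-word probabilities. The second line shows the bigram (first-order Markov) approximation with its count-based estimate, a classical simplification included here for illustration; neural language models instead compute the full conditional with a learned network.

```latex
P(w_1, w_2, \ldots, w_n) = \prod_{i=1}^{n} P(w_i \mid w_1, \ldots, w_{i-1})

P(w_i \mid w_1, \ldots, w_{i-1}) \approx P(w_i \mid w_{i-1})
  = \frac{\operatorname{count}(w_{i-1}, w_i)}{\operatorname{count}(w_{i-1})}
```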

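A standard way to measure how good a language model is uses cross-entropy, which measures how well the model's predicted probability distribution matches the actual distribution of words in the text. In practice it is computed as the average negative log-probability the model assigns to the words that actually occur. The lower the cross-entropy, the better the language model is at predicting the next word.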
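
A minimal sketch of that calculation, assuming we already have the probability a model assigned to each actual next word in some text. It also computes perplexity, which is 2 raised to the cross-entropy in bits and is commonly reported alongside it. The function names and the two "models" below are hypothetical, with probabilities invented for the example.

```python
import math
from typing import List


def cross_entropy(true_word_probs: List[float]) -> float:
    """Average negative log2-probability of the actual next words (bits per word).

    Every probability must be > 0, since log(0) is undefined; this is one reason
    practical models smooth their estimates so no word gets exactly zero.
    """
    if not true_word_probs:
        raise ValueError("need at least one prediction")
    return -sum(math.log2(p) for p in true_word_probs) / len(true_word_probs)


def perplexity(true_word_probs: List[float]) -> float:
    """Perplexity: 2 raised to the cross-entropy measured in bits."""
    return 2 ** cross_entropy(true_word_probs)


# Hypothetical probabilities two models assigned to the same four actual next words.
confident_model = [0.5, 0.5, 0.5, 0.5]
uncertain_model = [0.125, 0.125, 0.125, 0.125]

print(cross_entropy(confident_model), perplexity(confident_model))
print(cross_entropy(uncertain_model), perplexity(uncertain_model))
```

The model that gives the true words higher probability scores 1 bit per word (perplexity 2) versus 3 bits per word (perplexity 8) for the other, matching the rule that lower cross-entropy means better next-word prediction.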

Language models are fundamental to various NLP tasks, including machine translation, sentiment analysis, text generation, speech recognition, and more. As language models evolve and become more sophisticated, they empower us to build increasingly powerful and context-aware NLP applications.


Subscribe to STJayaprakash's AI-Aqua Substack to keep reading this post and get 7 days of free access to the full post archives.